Don’t miss out! To continue reading this article become a Knowledge Exchange member for free and unlimited access to Knowledge Exchange content and key IT trends and insights.

A lot of the hype around GenAI focuses on how it can be implemented to help create new content, which can be particularly useful for Marketing and Sales teams, but how can IT get in on the action?

There are many use cases for GenAI that IT teams can implement including:


GenAI is transforming the software and web development landscape by significantly increasing the speed and efficiency of coding tasks. Whether automating the creation of simple functions or helping developers with complex architectures, AI coding tools and copilots are becoming indispensable for IT engineering teams.

Accuracy is crucial in IT operations, as even small mistakes can lead to costly downtime or security vulnerabilities. GenAI can greatly decrease errors by automating complex, detail-oriented tasks that would otherwise be prone to human error. It can also increase efficiency in detecting operational and security issues, significantly reducing their negative impact.

Data is at the heart of IT operations, from monitoring network infrastructures to optimizing the IT user experience. Generative AI enhances data analysis by providing faster and more accurate insights than traditional methods, allowing for better decisions in real time.

However, while Generative AI tools are meant to boost the capabilities and productivity of IT teams, they cannot replace all human effort and due diligence. In fact, AI models can produce inaccurate or inappropriate results, and relying too much on GenAI may lead to overconfidence, where AI-generated outputs are not verified or validated.

Finding the right balance between automated AI output and human decision-making is key to effectively leveraging GenAI to boost productivity while ensuring quality control. While GenAI tools may work well to create an initial draft or work through specific problems, generated AI output still requires an extra set of “human” eyes.

To maximize the potential of AI and ensure output is consistent and of high quality, IT Teams must:

Clear standards and criteria for using generative AI tools must be created that indicate, for instance, when AI use is allowed, what data can be included, what the AI output expectations are, how to verify or modify AI results, and how to document AI usage.

IT Team members working with generative AI tools must receive training that sharpens their skills in using AI to its maximum potential while ensuring safety and effectiveness within established ethical limitations.

IT Teams should collaborate by sharing feedback, insights, suggestions, or corrections from using GenAI across processes, tasks and data. Working together will improve the use and quality of AI outputs, and increase the overall productivity of the team.

GenAI is rapidly becoming a game-changer for IT teams, with its capabilities to enhance accuracy in IT operations, transform data analytics, and significantly boost coding speed and efficiency. By automating tasks, generating code, and providing deeper insights into system performance and vulnerabilities, AI enables IT teams to work smarter, faster, and with greater confidence. And this is only the beginning. As GenAI technology continues to evolve, its integration into IT workflows will likely become a standard best practice, driving the future of IT innovation.

Source: Protech Insights

The digital world is constantly evolving, and so are the threats it faces. One of the most pressing concerns today is the battle between artificial intelligence (AI) and cybercriminals. As AI technology continues to advance, it is being deployed to defend against cyber threats.

However, it is also being used by malicious actors to launch more sophisticated attacks. In this blog post, we will examine the ongoing battle between AI and cybercriminals and explore the potential implications for the future of cybersecurity.

AI has the potential to revolutionize cybersecurity by automating many of the tasks that are currently performed manually. For example, AI can be used to analyze large amounts of data to identify patterns and anomalies that may indicate a cyberattack. Additionally, AI-powered systems can be used to detect and block malicious traffic in real-time.

AI can analyze vast amounts of data to identify potential threats that human analysts may miss.

AI-powered systems can automatically block malicious traffic before it reaches its intended target.

AI can help organizations gather and analyze threat intelligence to stay ahead of cybercriminals.
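As a rough illustration of the pattern-and-anomaly analysis described above, the sketch below flags unusual values in a traffic series using a simple z-score. It is a deliberately minimal stand-in for the far richer models used in real security products; the function name, the threshold, and the sample traffic numbers are all assumptions made for this example.

```python
from statistics import mean, stdev

def find_anomalies(values, threshold=2.5):
    """Return the indices of values whose z-score exceeds the threshold."""
    if len(values) < 2:
        return []
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return []
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical requests-per-minute counts; the spike at index 7 is invented.
requests_per_minute = [120, 118, 125, 122, 119, 121, 123, 950, 120, 117]
suspicious = find_anomalies(requests_per_minute)
print(suspicious)
```

In a short series like this one, a single spike also inflates the standard deviation, which is why the assumed threshold is set below the textbook value of 3. Production systems typically rely on more robust statistics or learned models.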

Unfortunately, AI is not a silver bullet for cybersecurity. It can also be used by cybercriminals to launch more sophisticated attacks. For example, AI can be used to generate realistic phishing emails or to create highly convincing deepfakes.

Additionally, AI can be used to automate the process of scanning for vulnerabilities in networks and systems.

AI can be used to generate highly convincing phishing emails that are more likely to trick victims.

AI can be used to create realistic deepfakes that can be used for fraud, disinformation, or blackmail.

AI can be used to quickly identify vulnerabilities in networks and systems.

The battle between AI and cybercriminals is likely to continue for years to come. As AI technology continues to advance, it will become even more powerful and versatile. However, it is also likely that cybercriminals will find new ways to exploit AI for malicious purposes.

The future of cybersecurity will depend on the ability of organizations to effectively leverage AI while also mitigating the risks associated with its use. This will require a combination of technical expertise, policy development, and ongoing education and training.

The battle between AI and cybercriminals is a complex and ongoing struggle. While AI has the potential to significantly improve cybersecurity, it also poses new challenges. Organizations must be prepared to adapt to the evolving threat landscape and to invest in the necessary tools and resources to protect themselves from cyberattacks.

With the onset of the COVID-19 pandemic in 2020 that triggered an unprecedented global shift to remote work, many organizations rushed to upgrade their PC fleets to improve performance and reliability and enable secure remote access for their suddenly dispersed workforce.

Today, those same organizations are in the process of their next large-scale PC refresh, and the digital landscape has rapidly evolved in the last four years with the integration of AI technology across all aspects of business operations. This has raised many challenges for organizations and their tech infrastructures in meeting greater collaboration and security needs, providing improved tech integration, and increasing PC workload capacity. The new AI PC may offer the solution.

Gartner estimates 54.5 million AI PCs will be shipped by the end of 2024, and Intel has launched an AI PC Acceleration program that plans to sell 100 million AI PCs by 2025. Further, a recent IDC forecast expects AI PCs to account for nearly 60% of all PC shipments by 2027.

Therefore, it’s strategically important that organizations consider the AI PC in their upcoming PC refresh if they want to stay competitive. Here we provide you with an overview of the AI PC and how it's transforming business operations.


An AI PC is a personal computer equipped with hardware and software components to run artificial intelligence (AI) applications and tasks. So unlike traditional PCs, which primarily function as tools for running applications, managing data, and performing routine tasks, AI PCs leverage machine learning, natural language processing, and advanced analytics to redefine the speed, intelligence, and capabilities of today’s PCs. Let’s take a look at some of the key benefits in greater detail.

These benefits are just the beginning of what the AI PC can deliver, with future AI technology expected to greatly advance business capabilities. Below is a sample of how the AI PC can optimize and accelerate various business operations today.

As companies approach their next PC refresh cycle, the choice is no longer just about upgrading hardware for speed and performance. AI PCs represent a paradigm shift, offering capabilities that extend beyond traditional computing. By integrating AI into everyday tasks, these systems are set to transform how businesses operate, from boosting productivity to enhancing security and accelerating innovation.

For businesses looking to stay competitive in an increasingly digital world, adopting AI PCs is not just an upgrade—it's a strategic move toward a smarter, more efficient future with endless possibilities.

Want to learn more about how your organization can gain a competitive edge with your next PC refresh in AI PCs? Connect with a Knowledge Exchange expert for the latest AI technology insights, and get the support you need in making your next PC fleet purchase.

Data privacy and protection, bias and discrimination, intellectual property rights, and climate change are among the concerns that must be addressed if we are to benefit from AI's advantages while minimizing its potential harm.


One of the most pressing issues regarding AI ethics is the potential for bias and discrimination. AI systems are trained on data, and if that data is skewed by societal biases, algorithms can perpetuate or even exacerbate these inequalities.

Bias reduces AI’s accuracy and can lead to discriminatory outcomes, particularly in law enforcement, healthcare and hiring practices. To combat bias in AI proactively, developers must ensure that the data collected and used is diverse and representative.

Many organizations are incorporating AI governance policies and practices to identify and address bias, including regular algorithmic audits. Further, the EU AI Act (in force as of August 1, 2024) sets, and the Algorithmic Accountability Act of 2023 introduced in the US Congress would set, clear rules and standards that companies must abide by in AI development and use in order to limit bias and discrimination, as well as cover privacy rights. Additionally, resources to help mitigate bias across AI are now available, such as IBM Policy Lab’s suite of awareness and debiasing tools.
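To make the idea of an algorithmic audit more concrete, here is a minimal sketch of one check such an audit might include: comparing how often a system selects candidates from different groups. The metric (a simple gap in selection rates), the function names, and the sample decisions are assumptions for illustration only, and a real audit would look at many more measures.

```python
from collections import defaultdict

def selection_rates(records):
    """Compute the share of positive decisions for each group.

    `records` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def parity_gap(rates):
    """Difference between the highest and lowest group selection rate."""
    return max(rates.values()) - min(rates.values())

# Hypothetical hiring decisions for two invented groups, "A" and "B".
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = selection_rates(decisions)
print(rates, parity_gap(rates))
```

A large gap does not prove discrimination on its own, but it is the kind of signal that would prompt a closer look at the training data and the model.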

To build trust in AI, transparency and accountability are ethical implications that need to be addressed. Disclosing how and why AI is being used, as well as the ability to explain the decision-making process to AI users in an understandable way gives credibility to AI decisions, helps prevent AI bias, and enhances the acceptance and adoption of AI technologies across society. Transparency is particularly crucial in areas such as healthcare, finance, and criminal justice, where AI decisions significantly impact individuals’ lives and the public as a whole.

Many companies are creating their own internal AI regulatory practices by introducing techniques, tools and strategies to demystify AI decisions and improve transparency, making them more trustworthy. For instance, AI model interpretation can be applied using various tools to visualize the internal workings of an AI system to determine how it arrived at a specific decision, as well as detect and rectify biases or errors.

Establishing clear lines of accountability for AI actions and outcomes is essential for fostering transparency. Holding organizations, AI developers, and AI users accountable motivates them to provide understandable explanations about how their AI systems work, what data was used and why, and the decision-making processes. This promotes responsible and ethical AI development and deployment, which in turn improves the general public’s acceptance and adoption of AI technologies.

Some regulatory laws and requirements have already been developed to ensure AI ethical responsibility. In addition to the EU AI Act noted earlier, there is:

As global collaboration on monitoring and enforcement measures for AI systems increases, more regulatory frameworks are expected in the future.

Due to AI’s collection of vast amounts of personal data, data privacy is another critical ethical consideration in the development and deployment of AI. While our lack of control over personal information has been growing since the beginning of the internet decades ago, AI’s exponential increase in the quantities of personal data gathered has heightened data privacy concerns.

Currently, there are very limited controls that restrict how personal data is collected and used, as well as our ability to correct or remove personal information from the extensive data gathering that fuels AI model training and algorithms.

The EU’s General Data Protection Regulation (GDPR) and the California Privacy Rights Act (CPRA) both restrict AI’s use of personal data without explicit consent, and provide legal repercussions for entities that violate data privacy. Generally, though, the ethical implications of personal data collection, consent, and data security still depend significantly on each organization’s internal regulation, leaving society to trust that they will do the right thing, often with only the risk of reputational damage as motivation.

When companies don’t establish internal ethical data practices in AI training, personal data risks can include:

To combat data privacy violations, some recommendations to future data privacy regulations include:

With its ability to create massive amounts of new output quickly that appears to be developed by humans, the benefits of generative AI are transforming creative industries. However, since AI-generated creative works are sourced from vast quantities of existing creative content, and due to the current proliferation of creative AI tools used, ethical and regulatory concerns about creative ownership have emerged.

The impact of AI on the job market is twofold: there are benefits such as increased productivity, economic growth, and the creation of new employment opportunities, but there are also significant concerns about the potential negative impact of job displacement due to AI technology.

AI technology is dually positioned to have a significant impact on the environment, as well as offer solutions to help alleviate climate change. Consequently, managing these contrasting effects will require AI companies and governments to ethically commit to driving sustainability in AI development and deployment.

As the use of AI technology continues to surge, so does its energy consumption and carbon footprint. In fact, due to AI’s energy-intensive computations and data centres, the power to sustain AI’s rise is currently doubling approximately every 100 days and will continue to increase, translating to substantial carbon emissions that directly impact climate change.
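To put the doubling figure above in perspective, the short calculation below converts a doubling period into a growth factor over a longer span. It assumes the 100-day doubling rate simply holds steady, which is an assumption made here for illustration rather than a forecast from the source.

```python
def growth_factor(days, doubling_period_days=100):
    """Return how many times a quantity multiplies over `days`
    if it doubles every `doubling_period_days`."""
    return 2 ** (days / doubling_period_days)

# Growth over one year at a constant 100-day doubling period.
one_year = growth_factor(365)
print(round(one_year, 2))
```

Under that assumption, demand would grow by a factor of roughly 12.5 in a single year, which shows how quickly a steady doubling rate compounds.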

Government and AI companies must take action to reduce the effects of AI on the environment. Recommended steps towards sustainability include:

In contrast, the power of AI technology has the potential to offer climate change solutions. AI can assist in analysing climate data, optimizing energy usage, and enhancing renewable energy systems. AI contributions such as these to climate change mitigation and adaptation are necessary to counteract the impact of AI technology, and additional AI-supported solutions need to be explored further.

In conclusion, as AI continues to transform various facets of society, addressing its ethical implications becomes paramount. The challenges of bias and discrimination, transparency and accountability, privacy and data protection, intellectual property rights, job displacement, and environmental sustainability require comprehensive strategies to mitigate potential harm. Regulatory frameworks like the EU AI Act and the Algorithmic Accountability Act of 2023 are crucial steps towards establishing ethical boundaries. However, responsibility also lies with AI developers and organizations to self-regulate AI. By fostering an environment of ethical AI development, we can harness AI's extraordinary potential while safeguarding societal values and human rights. The path forward demands collaboration across sectors to create a future where AI serves as a tool for positive change, rather than a source of ethical dilemmas.

Predictive maintenance uses advanced data analytics and proactive strategies to predict and address equipment issues before they cause breakdowns. This allows businesses to increase machine reliability, reduce costs, optimize resource allocation, and improve operational efficiency.

The advancement of Artificial Intelligence (AI) and Machine Learning (ML) has revolutionised predictive maintenance due to their scalability, adaptability, and continuous learning capabilities. This enables businesses to harness the power of data to make informed decisions about the maintenance cycle of their machines and devices.
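As a simplified sketch of the idea, the example below fits a straight-line trend to hourly sensor readings and estimates how long it will take to reach a failure threshold. Real predictive maintenance systems use much richer ML models; the function name, the vibration values, and the threshold are hypothetical.

```python
def hours_until_threshold(readings, threshold):
    """Estimate hours from the latest reading until the fitted linear
    trend reaches `threshold`. Readings are assumed to be taken hourly.

    Returns None when there is too little data or no upward trend.
    """
    n = len(readings)
    if n < 2:
        return None
    hours = range(n)
    mean_x = sum(hours) / n
    mean_y = sum(readings) / n
    # Ordinary least-squares slope and intercept.
    sxx = sum((x - mean_x) ** 2 for x in hours)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(hours, readings))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing_hour = (threshold - intercept) / slope
    # Clamp at zero if the trend has already passed the threshold.
    return max(0.0, crossing_hour - (n - 1))

# Hypothetical vibration readings (mm/s) and an invented alarm threshold.
vibration = [1.0, 1.2, 1.4, 1.6, 1.8]
remaining = hours_until_threshold(vibration, threshold=3.0)
print(remaining)
```

A maintenance team could use an estimate like this to schedule a service visit before the threshold is reached, rather than reacting after a breakdown.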


AI and ML enable predictive maintenance across industries such as manufacturing, automotive, and energy. Predictive maintenance can be implemented to optimize machine upkeep, with benefits including:

The goal of implementing predictive maintenance should be operational efficiency, reducing downtime, and financial savings. To successfully do so, there are some things you should take into consideration:

It is critical to integrate AI/ML predictive maintenance capabilities with existing maintenance management systems, enterprise resource planning software, and other operational technology. This enables companies to:

Implementing an AI-driven predictive maintenance model can pose certain challenges, particularly if moving from a reactive to a predictive maintenance strategy. Examples include:

As AI and ML technology evolve, there is huge potential for the sophistication of predictive maintenance solutions. In the future, we can expect the following:

In conclusion, machine-learning-driven predictive maintenance is quickly becoming indispensable for boosting operational efficiency across industries. With the power of AI and ML, businesses can not only predict equipment failures before they occur but also optimize maintenance schedules, save on costs, and minimize downtime. This proactive approach to maintenance, powered by data analytics and continuous learning capabilities, offers a smarter way to manage the life cycle of machinery and devices.

As we move forward, integrating IoT and cloud-based technologies with predictive maintenance systems promises to further enhance the effectiveness and responsiveness of these strategies. The challenge for businesses will be overcoming implementation hurdles such as data quality and legacy equipment to fully realize the potential of AI-driven predictive maintenance.

However, as technology progresses and organizations become more adept at navigating these challenges, the future of maintenance is set to be significantly transformed, emphasizing efficiency, sustainability, and cost-effectiveness.

Source: Protech Insights

In the fast-paced world of technology, the fusion of artificial intelligence (AI) and cloud computing has given rise to a powerful paradigm known as “Serverless AI.” This innovative approach allows businesses to create smarter and more efficient applications without the complexities of traditional server management. In this blog, we will explore what Serverless AI is and how it’s transforming the landscape of application development.

Serverless AI combines the benefits of serverless computing and artificial intelligence. It enables developers to build AI-powered applications without the need to provision, manage, or scale server infrastructure. Instead, cloud providers handle the underlying infrastructure, allowing developers to focus solely on writing code and developing AI models.
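To show what "focusing solely on code" can look like, here is a minimal sketch of a function written in the `(event, context)` handler shape that AWS Lambda uses for Python. The keyword-based `classify` function is a hypothetical stand-in for a real AI model or managed AI service, and the request and response layout is an assumption for this example.

```python
import json

# Hypothetical stand-in for a trained model; a real deployment would load
# model weights or call a managed AI service here.
NEGATIVE_WORDS = {"slow", "broken", "error", "crash"}

def classify(text):
    """Label text as 'negative' if it contains any flagged word."""
    words = set(text.lower().split())
    return "negative" if words & NEGATIVE_WORDS else "neutral"

def handler(event, context):
    """Function entry point: parse the request, run the model, return JSON."""
    payload = json.loads(event.get("body") or "{}")
    label = classify(payload.get("text", ""))
    return {"statusCode": 200, "body": json.dumps({"label": label})}

# Local usage example; in the cloud, the platform invokes handler() for us.
response = handler({"body": json.dumps({"text": "The app is slow today"})}, None)
print(response)
```

Nothing in the function deals with servers, ports, or scaling. Those concerns are left to the cloud provider, which is the core of the serverless approach.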

Here are some crucial advantages of serverless AI:

Serverless AI follows a pay-as-you-go model, where you only pay for the computing resources used during the execution of your application. There are no upfront infrastructure costs, making it cost-efficient, especially for applications with varying workloads.

Developers can focus solely on coding application logic and AI model development, as the cloud provider manages the underlying infrastructure. This accelerates the development process and reduces time-to-market for AI-powered applications.

Serverless AI functions automatically scale in response to incoming requests or events. This ensures that your application maintains optimal performance, even during traffic spikes.

Serverless AI eliminates the need for server management, allowing development teams to allocate their resources to innovation and feature development rather than infrastructure maintenance.

Easily integrate AI capabilities into your applications using cloud provider services like AWS Lambda, Azure Functions, or Google Cloud Functions. This enables you to leverage AI for tasks like data analysis, image recognition, or chatbot development.
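The pay-as-you-go advantage listed above can be illustrated with a small cost estimate. This sketch assumes a pricing model based on compute time (in GB-seconds) plus a per-request fee. The function name and all prices are invented for illustration and are not any provider's actual rates.

```python
def monthly_cost(invocations, avg_seconds, memory_gb,
                 price_per_gb_second, price_per_request):
    """Estimate a monthly bill as compute charges plus request charges."""
    compute = invocations * avg_seconds * memory_gb * price_per_gb_second
    requests = invocations * price_per_request
    return compute + requests

# Hypothetical workload: one million calls of 0.2 s each at 0.5 GB memory,
# with made-up unit prices.
estimate = monthly_cost(
    invocations=1_000_000,
    avg_seconds=0.2,
    memory_gb=0.5,
    price_per_gb_second=0.00002,
    price_per_request=0.0000002,
)
print(round(estimate, 2))
```

Because every term scales with the number of invocations, a month with no traffic costs nothing under this model, unlike an always-on server.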

Serverless AI is well-suited for various applications, including:

While Serverless AI offers many advantages, it’s essential to consider the following challenges:

Serverless AI is reshaping the way applications are developed and powered by AI. It offers a cost-effective, scalable, and developer-friendly approach to integrating AI capabilities into your applications. By leveraging the advantages of Serverless AI, businesses can create smarter, more efficient, and innovative applications that enhance user experiences and drive digital transformation in various industries. As AI and cloud technologies continue to evolve, Serverless AI is positioned to play a pivotal role in the future of application development.

Source: Protech Insights

Artificial Intelligence is no longer a futuristic concept; it’s rapidly becoming an integral part of our everyday lives, including the way we work. As AI technology continues to advance, its impact on jobs and industries is profound, reshaping traditional roles, processes, and business models. In this blog, we’ll delve into how AI is revolutionizing the future of work, the opportunities it presents, and the challenges it poses.

One of the most significant impacts of AI on the future of work is the automation of routine, repetitive tasks. AI-powered systems and algorithms can perform tasks such as data entry, data analysis, and customer service more efficiently and accurately than humans. This automation frees up employees to focus on more strategic, value-added tasks, leading to increased productivity and innovation.

However, the automation of routine tasks also raises concerns about job displacement and the need for upskilling and reskilling. While some jobs may be eliminated due to automation, new opportunities will emerge in areas such as AI development, data science, and digital marketing, requiring workers to adapt and acquire new skills.

AI has the potential to augment human capabilities, enabling workers to perform tasks more effectively and efficiently. For example, AI-powered tools can assist healthcare professionals in diagnosing diseases, analyzing medical images, and developing personalized treatment plans. In industries such as finance, AI algorithms can enhance decision-making by analyzing vast amounts of data to identify patterns and trends.

By leveraging AI to augment human capabilities, organizations can improve decision-making, increase accuracy, and deliver better outcomes for customers and stakeholders. However, this augmentation also requires careful consideration of ethical and privacy implications, as well as the potential for bias in AI algorithms.

AI is driving significant transformation across industries, from manufacturing and retail to healthcare and finance. In manufacturing, AI-powered robots and automation systems are revolutionizing production processes, leading to greater efficiency and cost savings. In retail, AI-driven personalization and recommendation engines are enhancing the customer shopping experience and driving sales.

Similarly, in healthcare, AI is transforming how diseases are diagnosed, treatments are administered, and patient care is delivered. From predictive analytics to virtual assistants, AI-powered solutions are helping healthcare organizations improve patient outcomes, reduce costs, and enhance operational efficiency.

AI is opening up new opportunities for innovation and entrepreneurship, enabling startups and established companies alike to develop groundbreaking products and services. From AI-driven chatbots and virtual assistants to autonomous vehicles and smart cities, the possibilities are endless.

Innovation in AI also presents opportunities for job creation and economic growth. As demand for AI technologies and solutions grows, so too will the need for skilled professionals in AI development, data science, and related fields. By fostering an ecosystem of innovation and entrepreneurship, countries and organizations can harness the full potential of AI to drive prosperity and competitiveness.

While the future of work with AI holds great promise, it also presents significant challenges and considerations. These include concerns about job displacement, the ethical use of AI, data privacy and security, and the need for regulatory frameworks to govern AI development and deployment.

Addressing these challenges requires collaboration between governments, businesses, academia, and civil society to ensure that AI is developed and used responsibly and ethically. It also requires a commitment to lifelong learning and upskilling to prepare workers for the jobs of the future.

In conclusion, AI is poised to have a profound impact on the future of work, transforming industries, creating new opportunities, and driving innovation. While AI presents challenges, it also offers immense potential to improve productivity, efficiency, and quality of life. By embracing AI responsibly and proactively addressing its challenges, we can shape a future where humans and machines work together to achieve greater prosperity and well-being for all.

IT Sustainability has gained significant traction lately due not only to environmental concerns, but also as a way to boost operational resilience and financial performance. This has also been driven by The Corporate Sustainability Reporting Directive (CSRD) which came into effect on January 5th, 2023. The directive will require almost 50,000 EU companies to report their environmental impact starting in the 2024 financial year. They must also ensure all their suppliers comply within certain parameters of real data, reporting and due diligence to develop a sustainable supply chain.

It is imperative for companies to invest in sustainable IT to comply with regulations, cut costs and reduce environmental damage. And according to a recent study where 78% of customers say environmental practices influence their decision to buy from a company, investing in sustainability also makes excellent business sense. That’s why sustainability has been identified as a top-three driver of innovation and a primary consideration in IT procurement. In fact, a recent study of 3,250 IT decision-makers showed that 79% indicated at least half of future IT investments would be directed towards achieving their sustainability initiatives.

There are four key areas that enterprises can invest in to reach their sustainable IT goals: artificial intelligence, green computing, automation, and supply chain. This blog will detail how your business can also benefit from adopting more sustainable technologies.


AI, in particular Generative AI, is already being used by companies to optimize a wealth of business activities. However, its role is also about to dominate the sustainability agenda.

Due to AI’s capacity to analyse vast quantities of data, it can be used to make informed decisions on energy use and efficiency, as well as measure and predict a number of other sustainable business needs.

AI is currently being used to deliver sustainability by:

And this is just a small sample of AI technology’s capacity in delivering sustainability solutions. To find out how each organization can benefit from AI in reaching sustainability goals, there are now a number of AI-powered sustainability platforms, such as IBM Garage, Microsoft Sustainability Manager, and Nasdaq Sustainable Lens, as well as AI reporting and decision support systems to help guide organisations in planning and achieving their IT sustainability transformation.

IT operations currently contribute 5 to 9% of global electricity consumption, a share projected to increase to 20% by 2030. The cost of this is extensive greenhouse gas emissions that have a significantly negative environmental impact. To counteract the negative impact of IT operations on the environment, “green computing” will be fundamental.

Green computing is the adoption of IT practices, systems and applications that lower energy usage and reduce the carbon footprint of technology.

Taking a life-cycle approach that considers everything from procurement and design to operations and end-of-life disposal, here are three practices that incorporate the “Green Computing” trend.

By streamlining tasks with minimal human intervention, automation has become part of all aspects of society, delivering efficiency and convenience. In the business world, companies have incorporated automated systems to expedite processes, boost productivity and enhance efficiency in their operations, which has also delivered sustainability benefits at scale.

In 2024, automation will continue to be leveraged in product design and manufacturing processes to improve accuracy, reduce waste and minimize the carbon footprint of companies. And specifically related to sustainability initiatives, automation will also make measuring, reporting and verifying emissions reductions more efficient and accurate through, for example, real-time monitoring of any environmental impact.

With supply chains accounting for 90% of an organisation's greenhouse gas emissions, supply chain management will reach a new level of importance in light of the new EU sustainability reporting requirements mentioned earlier, which are in effect from the beginning of 2024.

Not only will large EU companies and non-EU entities with substantial activity in the EU need to comply with established ESG reporting specifications, but these companies will be required to ensure all suppliers comply with certain parameters in order to develop a sustainable supply chain.

Therefore, companies will need to know how suppliers work environmentally in greater detail or be held accountable. Supply chain management, across all areas of a business including IT operations, will become more extensive as monitoring the sustainability of current suppliers and channel partners increases, and incorporating environmentally green criteria in new vendor selection and bidding is established. Actively seeking green IT partners and suppliers that comply with required EU sustainability goals will be the norm as procurement is optimized to consider the carbon footprint of all purchased materials and investments.

Whether you are fulfilling the new CSRD requirements, searching for long-term operational efficiencies, or wanting to keep and attract customers who choose businesses based on their environmental practices, IT sustainability must be a crucial part of your company’s framework. 2024’s sustainable IT trends include investing in AI, green computing, automation, and a greener supply chain to reach your sustainability goals, but they are just the beginning of the journey to a greener business world.

Do you need guidance in getting started on IT sustainability? A Knowledge Exchange Key Account Manager can help you map your sustainability technology initiatives. Get in touch to learn more about how we can help.

[/um_loggedin]At the beginning of the year, Google sent out a message to users about changes to its policy in relation to a forthcoming “sensitive event”; the World Health Organisation (WHO) sent out warnings of a new “Disease X”; and the academics, billionaires, politicians, and corporate elites of the world gathered at the World Economic Forum (WEF) in Davos, Switzerland to undergird such warnings and throw in a few predictions of their own.

It must be an election year!

“Rebuilding Trust” was the theme of the annual WEF gathering, which covered areas such as security, economic growth, Artificial Intelligence (AI), and long-term strategies for climate, nature, and energy. As Knowledge Exchange previously reported, the explosion of AI upon society presents both potential benefits and threats, including misinformation and disinformation that look set to increase this year.

The WEF warns that the benefits of AI will only come to fruition if ethics, trust and AI can all be brought together.

But the question many observers of the forum’s discussions are asking is: “Whose ethics, and whose trust in whom?”

This question of trust and ethics became particularly pointed for observers when discussions at Davos moved towards the potential combination of AI with biotech and nanotechnology.

Trust is certainly an important commodity for a world that is still barely crawling out of a pandemic, which has also seen a rapid rise in misinformation, disinformation and censorship around public health, climate, politics, economy, and war. This may make it difficult for people to rebuild trust in institutions, including think tanks like the WEF and other bureaucratic organisations.

But can we stop the rampant AI-empowered digital transformation in our personal and business lives that will increasingly make Moore’s law look like glacial progression? Especially when the calls for a pause¹ in AI development seem to have fallen on deaf ears?

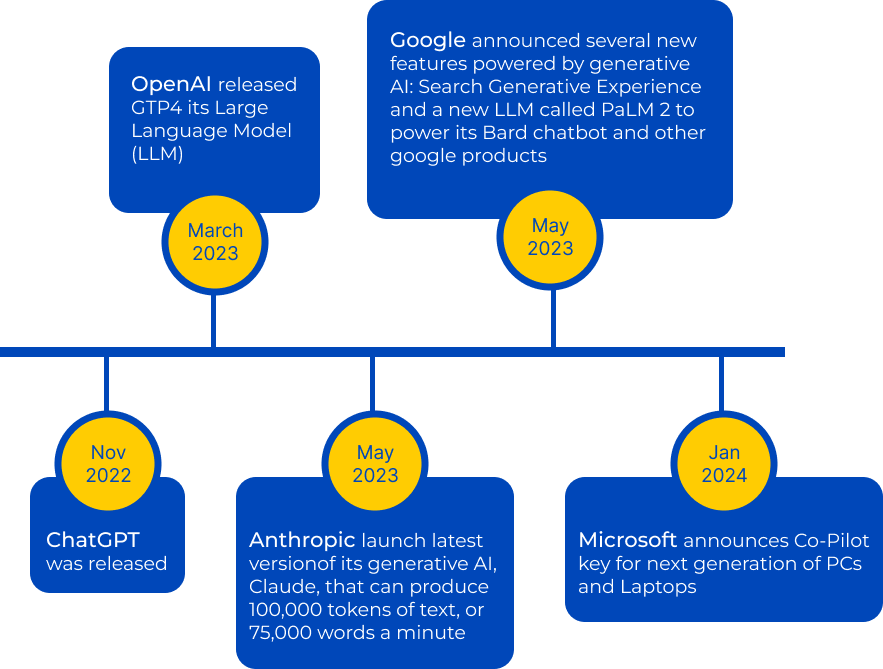

It seems not. After a slew of announcements and releases last year, IT giants such as Google and Microsoft continue to duke it out in the AI arms race in 2024, announcing plans to integrate AI functionality into search, office productivity software, and the operating system. Developments such as the introduction of a Microsoft Copilot key for certain laptops and desktop computers ramp up the hard integration of AI into the silicon of the CPUs, GPUs and NPUs of PCs and of servers in the cloud.

With analysts such as McKinsey predicting that Generative AI will “add the equivalent of $2.6 to $4.4 trillion of (Global) economic value annually”, who wouldn’t want to get a slice of the AI action?

However, McKinsey also rightly stated that, as with other ‘generational’ advancements in technology, it can be difficult to get past the pilot stage and turn the promise into sustainable business value. CIOs and CTOs have a “critical role in capturing that value”, according to its report “Technology’s generational moment with generative AI: A CIO and CTO guide”, which states:

“Many of the lessons learned from [early developments in internet, mobile and social media] still apply, especially when it comes to getting past the pilot stage to reach scale. For the CIO and CTO, the generative AI boom presents a unique opportunity to apply those lessons to guide the C-suite in turning the promise of generative AI into sustainable value for the business.”

But it also comes at a cost, especially in terms of human employment, with industries led by media and entertainment, banking, insurance and logistics looking likely to be hit the hardest, according to a poll of top directors by the FT.

"Whenever you get new technologies you can get big changes," said Philip Jansen, Chief Executive of BT, who spoke to the BBC about the 55,000 jobs it intends to cull by the end of the decade, with a fifth of those being replaced by AI.

Because Generative AI tools can imitate humans in conversational chat, solving problems and writing code, Jansen added that AI would make BT’s services “faster, better and more seamless.”

According to a ResumeBuilder survey of 750 business leaders using AI, headlined “1 in 3 companies will replace employees with AI in 2024”, 44% of companies surveyed say AI will lead to layoffs in 2024; 53% of companies use AI, with a further 24% planning to start in 2024.

In light of alarming cuts such as this year’s Google AI layoffs, Onur Demirkol of Dataconomy believes:

“The tech industry is navigating a transformative period where AI is increasingly automating tasks traditionally handled by humans.

As we see these changes, the industry is adapting to innovative technologies and facing critical questions about the implications on employment and the broader workforce landscape.”

Although proponents of AI development probably dismiss dystopian claims about machines running amok, as in The Terminator, or a society in a perpetually surveilled panopticon², as envisaged by George Orwell in 1984, there are alarming signs of such technology insidiously being introduced into society. This can be either in the guise of entertainment, such as the Metaverse, or in the name of public safety, such as the New York Police Department’s Digidog, which was reintroduced last year. GPS trackers and autonomous security robots all have the potential to link to AI technology to detect, track, discriminate and engage “targets.”

And while RoboCop might still be some years off, it would seem that groupthink narratives, spiked by a cocktail of AI and algorithms to prepare society for events such as Disease X, current and future wars, and issues surrounding climate change, are already at work. With growing instances of deepfakes and misinformation, narratives are being pushed through ephemeral persuasion via search and social media. And it is not only high-profile celebrities, sports people or politicians who are being targeted. In the wrong hands, such tactics can damage the reputations of businesses or individuals, for example by impersonating the CEO in an email or using deepfakes to discredit individuals opposed to a certain viewpoint or narrative. This is something that CIOs and CTOs need to watch for and alert staff about.

Copyright and intellectual property are also areas where AI could get companies into trouble as they increasingly look to AI to help in copywriting and marketing activities. For example, while creating art using an AI platform like DALL-E or Midjourney may seem miraculous and tremendously impressive, questions are being asked about what sources have been used to train these platforms and whether they had permission to use them. Stability AI, the maker of Stable Diffusion, found out the hard way when it received papers from Getty Images for copyright infringement.

If a company then creates an image to use in marketing or on the Web using a similar AI platform, theoretically another company like Getty could argue that this is also copyright infringement and sue that company using the AI platform as well.

In other areas, what happens when employees start uploading corporate presentations, technical designs, white papers or even code into AI platforms to give them a bit more zing? What then happens to the information that is uploaded? And could a potential competitor use this information for their own gain? For example, I am sure the designers at Mercedes F1 would love to use AI to design a car like Adrian Newey’s Red Bull.

What companies need to remember is that Millennials now make up over half of those making IT buying decisions. Compared to their Gen X colleagues, Millennials are more digitally native, as they grew up in an increasingly online and digitally enabled world, and this is quickly changing the way companies buy technology.

This means that influencing, rather than hard selling, has bled over from the B2C world into B2B purchasing, and this is a key factor in so many companies looking to invest in AI. Companies want to invest in solutions that offer the conversational interaction of humans, but also the analytical, predictive and processing power of machines that can capture intent signals, steer conversations in the right direction, or suggest solutions that might fit customer needs. If regulation brings greater accuracy, transparency, and accountability, there will be more trust in rolling out AI systems, and that may involve more, rather than less, input and supervision of AI systems by human knowledge workers.

Pat Gelsinger, CEO of microprocessor and AI giant Intel, believes that it is fundamentally all about improving the accuracy of AI’s results. In an interview with US cable broadcaster CNBC, he said “This next phase of AI, I believe, will be about building formal correctness into the underlying models.”

“Certain problems are well solved today in AI, but there’s lots of problems that aren’t,” Gelsinger said. “Basic prediction, detection, visual language, those are solved problems right now. There’s a whole lot of other problems that aren’t solved. How do you prove that a large language model is actually right? There’s a lot of errors today.”

Gelsinger believes although AI will improve the productivity of the knowledge worker, the knowledge worker or human still has to have confidence in the technology.

Trust is especially important for the IT industry, whose technology is seen as the key not only to the riches of the AI castle, but also to the general systems that people rely on in their day-to-day lives.

In January of this year, ITV’s docudrama, Mr. Bates vs The Post Office, captured the attention of the UK public as it detailed the scandal surrounding the Horizon IT system, which has been rumbling on for the last 20 years. Horizon was originally introduced to eliminate paper-based accounting. Over 900 UK sub-postmasters³ were the victims of what some commentators are calling one of the biggest miscarriages of UK justice, which saw many wrongfully convicted of theft or fraud, some jailed, and all smeared because of the ultimately faulty IT system.

Back then, before cloud and AI, human intelligence was responsible for large-scale IT rollouts, which were open to human error in software coding.

And it has taken 20 years of campaigning, questions in Parliament, investigations by publications such as Computer Weekly and finally a TV show to shine a light on a faulty IT system that was introduced in 1999, in order for the victims to finally get justice.

Sadly, some of the wrongly accused committed suicide or did not live to see the promised reparations, overturned convictions, and the clearing of their names they all deserved. Many cases are still sub judice.

What was galling for many viewers of the dramatization was that some insiders at the Post Office allegedly knew there were issues with the system, such as the ability to log into it remotely, but continued to promote the misinformation that the system could only be accessed by the local operator, and thus that any errors in the accounting were solely down to the (human) postmaster. As it turns out, this was not true.

In this case, it appears that the humans running the Post Office, who covered up the Horizon IT system errors, were to blame. But when AI, which can already successfully write code in languages like C++, gets to the stage of being trusted enough to code sensitive medical, military, or other machinery that interacts with human ‘nodes’, will it become more difficult to question and hold accountable when machines are running the show?

And worse still, with the introduction of AI into law enforcement or military machines, will lethal decisions be made in error? Will AI systems, like the HAL 9000 computer in 2001: A Space Odyssey, think they are:

“By any practical definition of the words, foolproof and incapable of error.”

As we face a new era in artificial intelligence, the rapid development of AI is underscored by the critical need for a balanced and ethical approach to said development.

There is an undoubted need for robust ethical frameworks, transparent practices, and proactive governance to harness the transformative power of AI while mitigating its risks. It is imperative for stakeholders to collaborate to ensure AI serves as a force for good, and that society fosters an innovative yet equitable and ethical outlook in the march toward a technologically advanced future.

[/um_loggedin]A common misconception about artificial intelligence is that it is expensive, complex, or requires technical expertise to put into practice. In reality, SMEs can integrate low-risk, affordable, easy-to-customise AI tools and platforms that give them the opportunity to level the playing field with larger enterprises.

While there are a vast number of AI tools that can help SMEs maximize their efficiency and productivity, here are three areas of your business where you can integrate AI and see benefits to your business right away.

Employing AI chatbots and customer service automation allows SMEs to enhance their CX by delivering more efficient and satisfying customer service, which in turn generates customer loyalty and drives business growth. AI also allows SMEs to scale their customer engagement and free up resources needed for more critical customer interactions with tools such as:

SMEs can benefit from AI tools that execute machine learning to automate and enhance a variety of marketing functions such as market research, branding, and content generation. AI-based marketing tools can:

AI can increase efficiency within your business by optimising processes. For example, it can be used to:

This is just the tip of the iceberg when it comes to integrating AI technology into your business. As AI continues to evolve and deliver more capabilities, it is essential for SMEs to embrace it to increase efficiencies, enhance productivity, drive growth, and remain competitive.

To implement AI strategically, begin by identifying areas where AI can add the most value to your SME, then research and choose the right tools, and continue to stay up to date with the latest AI advancements to scale up your business. If you are looking for help developing a robust AI strategy, Knowledge Exchange's Key Account Managers can support you and connect you with the right providers. Get in touch today.

[/um_loggedin]Source: Protech Insights

In recent years, the financial industry has been undergoing a digital revolution, with emerging technologies reshaping the way businesses operate and interact with their customers. One such technology that is making significant waves in the finance sector is ChatGPT, a language model powered by artificial intelligence. ChatGPT, developed by OpenAI, has demonstrated its transformative potential in various fields, and the finance industry is no exception. This article explores the role of ChatGPT in finance business and the benefits it brings to financial institutions and their customers. AI in finance can be extremely powerful when optimized correctly.

Here are some essential points to know about the role of ChatGPT in finance business:

Customer service is paramount in the finance industry, and ChatGPT has emerged as a valuable tool for enhancing customer interactions. With its natural language processing capabilities, ChatGPT in finance can engage in conversations with customers, answer their queries, and provide personalized assistance. This technology enables financial institutions to offer round-the-clock support and improve customer satisfaction. Whether it’s addressing account inquiries, explaining financial products, or assisting with basic transactions, ChatGPT can handle a wide range of customer service tasks efficiently.

Investment and financial advisory services often require complex analysis and expert guidance. ChatGPT can be trained to understand financial concepts, analyze market trends, and provide insightful recommendations to investors. It can assist customers in understanding investment options, portfolio diversification, risk assessment, and other financial planning aspects. By leveraging ChatGPT in finance, financial advisors can provide timely and accurate information, helping clients make informed decisions and achieve their financial goals.

The finance industry is heavily regulated, and compliance with laws and regulations is of utmost importance. ChatGPT can play a vital role in ensuring compliance and managing risks. It can be trained to understand regulatory frameworks and provide guidance on compliance-related matters. By using natural language processing capabilities, ChatGPT can review and analyze vast volumes of financial data, identifying potential risks and anomalies. This helps financial institutions detect fraud, money laundering, and other illicit activities more efficiently, contributing to a safer and more secure financial ecosystem.

Streamlining operations and reducing manual effort is a priority for finance businesses. ChatGPT can automate routine tasks, such as data entry, report generation, and document processing. This automation frees up valuable time for finance professionals to focus on more strategic activities. By integrating ChatGPT with existing systems and workflows, financial institutions can achieve greater operational efficiency and cost savings.

Data analysis is a crucial component of finance business decision-making. ChatGPT can assist in analyzing large volumes of financial data, identifying patterns, and generating insights. It can provide real-time information on market trends, customer preferences, and investment opportunities. By leveraging ChatGPT’s analytical capabilities, financial institutions can make data-driven decisions, mitigate risks, and capitalize on emerging market trends.

While ChatGPT offers numerous benefits, there are challenges and considerations to address. One of the primary concerns is ensuring data privacy and security. Financial institutions must implement robust security measures to protect customer information and prevent unauthorized access. Additionally, careful training and monitoring of ChatGPT are necessary to avoid biases and ensure accurate responses. Financial professionals should exercise caution when relying solely on ChatGPT recommendations and consider them as supplementary information rather than definitive advice.

ChatGPT is transforming the finance business by enhancing customer service, improving financial advisory, streamlining compliance and risk management, enabling process automation, and enhancing data analysis. Financial institutions that leverage this technology can gain a competitive edge, deliver better customer experiences, and make more informed decisions. However, it is crucial to address security and privacy concerns and exercise human oversight to ensure the responsible use of ChatGPT in the finance industry. As technology continues to advance, ChatGPT is poised to play an increasingly significant role in shaping the future of the finance business.

The EU's landmark AI Act gained momentum last week as negotiators from the EU parliament, EU commission and national governments agreed rules about systems using artificial intelligence. The proposal will be passed in the new year by the European Parliament, but what does it mean for the AI technological revolution?

The draft regulation aims to ensure that AI systems placed on the European market and used in the EU are safe and respect fundamental rights and EU values. This landmark proposal also aims to stimulate investment and innovation on AI in Europe.

“This is a historical achievement, and a huge milestone towards the future! Today’s agreement effectively addresses a global challenge in a fast-evolving technological environment on a key area for the future of our societies and economies. And in this endeavour, we managed to keep an extremely delicate balance: boosting innovation and uptake of artificial intelligence across Europe whilst fully respecting the fundamental rights of our citizens.” – Carme Artigas, Spanish secretary of state for digitalisation and artificial intelligence.

The aim of the act is to ensure the responsible development and deployment of AI technologies, and it addresses key concerns such as risk, transparency, and ethical use of AI.

AI technology will fall into one of four risk categories – Prohibited, High-Risk, Limited Risk and Minimal Risk – based on the intended use of the system. Examples of High-Risk AI include systems used in sectors such as healthcare, transportation, and the legal system where AI’s impact could have greater consequences.

Any high-risk AI system will have to pass a fundamental rights impact assessment before it is put on the market. The act will also oblige certain users of such systems to register in the EU database for high-risk AI systems. There are also provisions for lower-risk systems, such as disclosing that content is AI generated to allow users to make more informed decisions.

In order to protect individual privacy, the act proposes strict limitations on the use of biometric identification and surveillance technologies by law enforcement and public authorities, particularly in public places. Such systems will be subject to appropriate safeguards to respect the confidentiality of sensitive operational data.

In addition to determining the risk of a system using AI, the act also sets out cases where the risk is deemed unacceptable and therefore such systems will be banned entirely in the EU. Examples of such applications include cognitive behavioural manipulation, the untargeted scraping of facial images from the internet or CCTV footage, emotion recognition in the workplace and educational settings, social scoring, biometric categorisation and some cases of predictive policing.

The initial proposal by the European Commission was substantially modified in order to create a legal framework designed to support the innovation of AI systems in a safe and sustainable manner.

It has been specified that AI regulatory sandboxes, designed to create a controlled setting for the development, testing, and validation of cutting-edge AI systems, should permit the testing of these systems in actual real-world scenarios under specific conditions and safeguards. To ease the administrative load for smaller companies, the provisional agreement outlines a set of measures to support such operators and incorporates limited and clearly defined exceptions.

The Act also sets out potential penalties for any company in violation of the legislation, which are set as a percentage of the company’s global turnover or a fixed amount, whichever is higher. There will be more proportionate caps on administrative fines for SMEs and start-ups. The penalties are as follows:

AI has revolutionized many aspects of human life, both in personal and professional aspects, and has been a trending topic globally. The AI Act will be finalised in the coming weeks and will come into effect in 2025, with some exceptions for specific provisions. It comes at a time when AI’s development has been described as over-hyped in the short term and underestimated in the long run. The EU is the first governing body to address the concerns, challenges, and opportunities of AI with legislation and it could set a precedent for other nations to follow suit.

[/um_loggedin]This month’s Knowledge Exchange will examine both the benefits and the potential dangers of unregulated Artificial Intelligence on Enterprise resourcing, business IT platforms, sales and marketing strategies and also on customer experience. It will also ask if it is possible to pause AI development to roll out ethical and regulated AI that protects and enhances jobs rather than potentially replace them; protects personal data, privacy, and preferences rather than manipulate it for nefarious reasons and that doesn’t spiral us into a “Terminator” or “Matrix” like future where the machines are in control!

As we discussed in last month’s Knowledge Exchange on Hybrid Cloud, Artificial Intelligence (AI) has some compelling uses for ITDMs, especially when it can help tame IT complexity by automating repetitive and time-consuming tasks. It can also be used to learn from past data and write code, and to autogenerate content and images from multiple sources by mimicking human intelligence and human labour. As the technology develops, there seems to be a whole raft of plug-ins and algorithms that people such as Microsoft’s chief executive, Satya Nadella, believe will “create a new era of computing.”

And as corporations and investors are constantly looking at growth, efficiencies and ultimately profit, the lure of AI to support this new paradigm must be an irresistible proposition right now, especially as we are seeing a lot of economic pressure from various financial, energy and geopolitical crises.

This is perhaps why, as news service Reuters notes, since the March 2023 launch of Microsoft’s AI “Copilot” developer tool, over 10,000 companies have signed up to the suite, which can help with generative creation and optimisation in its Office 365 Word, Excel, PowerPoint and Outlook email software, and which some commentators are calling “Clippy on Steroids”.

Speaking to Tom Warren, senior editor at The Verge, Microsoft 365 head Jared Spataro said: “Copilot (sic) works alongside you, embedded in the apps millions of people use every day: Word, Excel, PowerPoint, Outlook, Teams, and more…it (sic) is a whole new way of working.”

Microsoft has also invested $1bn in the former non-profit OpenAI, a company co-founded by billionaire inventor and investor Elon Musk, who has since left the company to concentrate on his Tesla automotive and SpaceX aerospace businesses. Now a for-profit business, OpenAI is working on an Artificial General Intelligence (AGI) designed to perform like a human brain.

The partnership will offer Microsoft the chance not only to catch up with Google’s recent AI developments, but also to gain more muscle for AI development by combining its resources and hardware with the researchers and developers at OpenAI, according to industry watcher TechGig, which noted:

“Microsoft and OpenAI will jointly build new Azure AI supercomputing technologies. OpenAI will port its services to run on Microsoft Azure, which it will use to create new AI technologies and deliver on the promise of artificial general intelligence. Microsoft will become OpenAI’s preferred partner for commercialising new AI technologies,” according to an official Microsoft statement.

Back in November 2022, OpenAI launched its much-lauded and controversial text generator ChatGPT, ahead of rival Google, which launched its Bard text generator in February 2023. Until then, Google had largely been considered to be ahead of its rivals in integrating AI tools into products such as its search engine, something Microsoft also started to implement in its Bing search engine in February. But unlike ChatGPT, Bard is still not available or supported in most European countries.

As the AI ‘arms race’ heated up in April this year, Google launched a raft of tools for its email, collaboration and cloud products, according to Reuters, which reported that Google had combined its AI research units Google Brain and DeepMind to work on “multimodal” AI, like OpenAI’s latest model GPT-4, which can respond not only to text prompts but to image inputs as well to generate new content.

And there certainly seems to be a tsunami of AI projects that are either in development or planned for rollout from seemingly all collaboration, content, cloud, data and security vendors, which can see the potential of AI to enhance current offerings and workflows. As we examined in March’s Knowledge Exchange whitepaper, adding AI to cybersecurity applications to counter increasing vulnerability and the growing sophistication of attacks, including AI-generated malware, was one of the main hopes and priorities of ITDMs for 2023 and beyond.

In other applications of AI, from a customer experience point of view, the ability to generate not just content but localised or personalised content is also very attractive for companies producing website content, emails and sales and marketing communications, because it doesn’t require hundreds of content and digital specialists to create it. For example, in the UK, the Press Association (PA) has recently launched its Reporters and Data and Robots (RADAR) service to supply local news media with a mixture of journalist-generated and AI-generated content to supplement their local coverage.

This venture is beneficial for local areas that have seen local news coverage drop in favour of centralised national news coverage, and it shines a light on how AI and human intelligence can be combined to create quality content. And from a marketing point of view, the ability to generate personalised emails by AI using data from multiple sources would seem like discovering the Holy Grail. Imagine a scenario where AI looks at intent data from a company’s Ideal Customer Profile list to see who is currently in market for a product or service by analysing what content a company or individual might be consuming. Using some predictive analysis, autogenerated content could be used to nurture those companies or individuals to a point where more predictive analysis could be deployed to analyse where they are in the purchasing process and create content accordingly. Or it could be used to send invitations to follow up with a salesperson, or to drive people to a website where AI-enhanced chatbots can gather yet more information about a product or service requirement in a more conversational style, as cloud giant AWS is developing:

Businesses in the retail industry have an opportunity to improve customer service by using artificial intelligence (AI) chatbots. These solutions on AWS offer solutions for chatbots that are capable of natural language processing, which helps them better analyse human speech.

By implementing these AI chatbots on their websites, businesses can decrease response times and create a better customer experience while improving operational efficiency.

Lastly, from a sales and business development point of view, having AI-enhanced tools that make call intros less cold and help with follow-up through more personalised, less generic emails is also a compelling application of AI in the lead and pipeline generation space.

With increasingly lean SDR and BDR teams, it is often difficult for companies, especially start-ups, to get sales staff up to speed on complex IT solutions. But having a product subject matter expert involved in modelling the AI algorithms that enhance conversational email and follow-up allows the BDR/SDR not only to achieve the right messaging but also to manage and communicate with more potential leads.

As a species, humans have seemingly always developed tools, products, medicines, industries, and societal frameworks that have the potential to either help or harm society. But more recently we’ve really accelerated the ability of our tools to impact our world for better or worse.

But often, we are too distracted by the “wow isn’t that cool” part of technology before we think: Should we be doing this? What are the downsides? Who is regulating this? What are the long-term impacts? Can we stop it if we have to?

AI systems with human-competitive intelligence can pose profound risks to society and humanity, as shown by extensive research and acknowledged by top AI labs. As stated in the widely-endorsed Asilomar AI Principles, Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.

Pause Giant AI Experiments: An Open Letter

And while we marvel at dancing robotic dogs from Boston Dynamics or at AI-generated imagery and text, there are many leading tech and industrial figures who are worried about the implications of rapidly evolving, unregulated AI for businesses and society at large. They are very worried.

Before his death, eminent physicist Stephen Hawking forewarned that AI could help to end the human race. And in March this year, Elon Musk, who has started his own AI company, and a whole host of tech leaders put their signatures to an open letter calling on the tech industry to “pause giant AI experiments” for at least 6 months and not to train systems more powerful than GPT-4. The letter at the time of writing had nearly 30K signatures.

The letter said:

“AI systems with human-competitive intelligence can pose profound risks to society and humanity, as shown by extensive research and acknowledged by top AI labs. As stated in the widely-endorsed Asilomar AI Principles, Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.

“Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control.”

And although many play down this threat by assuming we can switch the machines off, there are others who think that if the core ethics and principles of AI are not agreed, implemented and policed now, the ability for ‘bad actors’ to exploit this technology for serious societal damage is very real (see Ugly, below).

For Musk, the proposal is simple. Drawing on his experience of the regulation seen in the automotive and aeronautical businesses, he believes the same regulation and oversight is badly needed for AI now. For Musk and others, the sheer speed of AI development is currently ‘out of control’. And in a climate where institutions and governments are attempting to root out online disinformation, propaganda and other influencing material, a next breed of generative AI that can combine text, picture and video ‘deepfakery’ could make this process extremely difficult.

This view is shared by Dr. Geoffrey Hinton, who dramatically quit his job at Google recently, warning of the dangers of AI. Widely seen as a pioneer or ‘the Godfather’ of AI, Hinton and his research team at the University of Toronto developed, in 2012, the deep learning capabilities and neural networks that are the foundation of current systems like ChatGPT.

Speaking to the New York Times, Hinton said he quit his job so he could ‘speak freely’ about the risks of AI and that he now ‘regrets his life’s work’.

In an interview with the BBC, Hinton explained that the digital intelligence being developed is different to biological intelligence in that individual AI “copies” can learn separately but share what they learn instantly, similar to a hive mind or collective, and that it will quickly eclipse average human intelligence.

Although Hinton believes that AI is currently not ‘good at reasoning’, he expects this to “get better quite quickly”. And although he currently doesn’t think that AI becoming sentient or heading to a Terminator-style future is the main concern, he is concerned about AI’s effect to:

John Wanamaker pioneered the practice of marketing throughout the 19th century and early 20th century. Since then, companies have been investing in marketing and advertising to understand consumer preferences and influences. With TV ratings being measured since the 1940s and the rise of marketing agencies in the 1950s, the marketing industry now generates billions of dollars annually.

It now seems everyone wants to know not just what you watch or what brands you like, but who your friends are, where you go and other personal data that can be stored, analysed, and used or sold to those that are interested. Combined with increasing surveillance through signals intelligence and physical surveillance like CCTV and embedded cameras in devices, this creates a troubling prospect of mass surveillance, narrative manipulation, reduced freedoms, and increased central control for those who are aware of these developments.

As the Internet Age became more prevalent in the mid 1990s, most people, certainly in the Western hemisphere, were happy to share some personal information in return for a more personalised online experience or more relevant advertising for things they might want or need. The bad actors, we were told back then, were the criminal gangs that operate on the dark web and broker personal information from cyber breaches to set up scams and other fraudulent activity.

Back then, not many people were fully aware or willing to admit that it wasn’t just the bad actors that were looking to track your online footprint and preferences. It was also governments around the world, or more accurately government departments such as the National Security Agency (NSA) in the US, that were increasingly interested in what you are up to! And these departments have little oversight by elected officials in the US Senate or Congress.

And it wasn’t until people such as Julian Assange of Wikileaks and former NSA contractor and whistle-blower Edward Snowden made us aware of some unethical online digital practices by our governments that we started to realise that the cool tech we were becoming more reliant on was increasingly being used not just for anti-terrorism or national security measures, but just to keep tabs on people’s everyday activity, conversations and online networks. It was Snowden who revealed that cameras and microphones contained within certain devices can be activated without the user’s knowledge and used to monitor digital footprints, keywords and other activity. Speaking to the Guardian, Snowden said that the NSA is “… intent on making every conversation and every form of behaviour in the world known to them”.

“What they’re doing” poses “an existential threat to democracy”, Snowden said.

Even in “flight mode” or switched off, your indispensable tech is recording, surveilling and, once reconnected to the internet, feeding back zettabytes of data into remote and often unregulated servers that are increasingly using artificial intelligence to conduct predictive modelling and other learnings.

And while this practice, we are again told, is to prevent terrorism or help curtail the spread of pandemics, personal biometric and health information is increasingly being gathered and monitored in vast quantities, analysed alongside individual consumer practices and sold on.

Even personal movement, whether on foot, by car or by public transport, is being captured, analysed and stored by so-called health trackers on watches and phones and by automatic number plate recognition (ANPR).

In fact, almost all private companies these days have entered the “spy business” in one way or another, according to J. B. Shurk of international policy council and think tank the Gatestone Institute.

And this information is increasingly being bought by governments around the world to track and monitor the activities and movements of their citizens, according to Ross Muscato of the Epoch Times, who notes that the chair of the House Committee on Energy and Commerce, US Rep. Cathy McMorris Rodgers, confirmed that US state and federal governments regularly purchase Americans’ personal data from private companies so that they may “spy on and track the activities of U.S. citizens.”

"No kind of personal information is off-limits. Government agents use data brokers to collect information on an individual's GPS location, mobile phone movements, medical prescriptions, religious affiliations, sexual practices, and much more.”

Muscato also quotes findings from US Senator Rand Paul, who discovered at least 12 overlapping Department of Homeland Security (DHS) programmes that were tracking what US citizens were saying online and were targeting children to report their own family for “disinformation” if they disagreed with, or had counter information about, the official Covid narrative.

And it is perhaps these current “hand-cranked” measures used by agencies, which could be incredibly enhanced via AI, that people such as Elon Musk and Dr. Hinton are referring to when they voice concerns over the rapid, unregulated development of AI. The concern is sharper still if AI were weaponised through legislation such as the controversial US RESTRICT Act, which could give AI the power to track millions of pieces of communication, mislabel them as dangerous, extremist or hate speech, and then take further action against the individual.

So as we enter the summer months, why don’t we take a 6-month breather: not to stop what is currently in development, or to stop exploring the benefits of AI for our current business needs and challenges, but to debate in a non-partisan way a technology that has tremendous potential for good and which, if not regulated and controlled, could leave us in a scenario where we can’t put the genie back in the bottle. For more details of how the industry may make policies during a pause please visit here:

2. https://www.reuters.com/technology/tech-ceos-wax-poetic-ai-big-adds-sales-will-take-time-2023-04-26/

3. https://www.theverge.com/2023/3/16/23642833/microsoft-365-ai-copilot-word-outlook-teams

6. https://futureoflife.org/wp-content/uploads/2023/04/FLI_Policymaking_In_The_Pause.pdf

7. https://futureoflife.org/ai/benefits-risks-of-artificial-intelligence/

8. Isaac Asimov’s Laws of Robotics

In today’s hyperconnected world, AI has revolutionized the way we work. But as more and more information and data flows seamlessly, cyberthreats are becoming ever-more sophisticated and traditional cybersecurity measures are no longer sufficient.

Cyberattacks are on the rise, and although on one hand, threats have become more sophisticated due to advancements in AI and Machine Learning (ML), AI has also become an indispensable tool in cybersecurity. This is due to its ability to analyse vast amounts of data, detect anomalies, and adapt to evolving threats in real-time.

Artificial Intelligence (AI), Machine Learning (ML), and Deep Learning (DL) have multiple applications in cybersecurity to make threat detection and prevention strategies more robust and effective. Some benefits of their use include:

■ Phishing detection and prevention

AI-powered algorithms can detect email phishing by analysing vast amounts of data to thwart phishing attacks. ML models can be trained to flag suspicious details of an email, such as the sender’s address, links and attachments, and the language used in the message, to determine whether it comes from a trustworthy source.
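To make those signals concrete, here is a minimal Python sketch. It is not a trained ML model: it uses hand-written rules as a stand-in, checking the same three details mentioned above (sender address, links and language). The function name `phishing_signals`, the `URGENT_WORDS` list and the trusted-domain set are all assumptions made for illustration.

```python
import re
from urllib.parse import urlparse

# Hypothetical list of pressure words; a real system would learn these from data.
URGENT_WORDS = {"urgent", "verify", "suspended", "immediately", "password"}


def phishing_signals(sender, body, links, trusted_domains):
    """Return a list of human-readable reasons an email looks suspicious."""
    signals = []

    # 1. Sender address: is the domain one we already trust?
    sender_domain = sender.rsplit("@", 1)[-1].lower()
    if sender_domain not in trusted_domains:
        signals.append(f"unfamiliar sender domain: {sender_domain}")

    # 2. Links: raw IP addresses and unknown hosts are both warning signs.
    for url in links:
        host = (urlparse(url).hostname or "").lower()
        if re.fullmatch(r"\d{1,3}(\.\d{1,3}){3}", host):
            signals.append(f"link points to a raw IP address: {host}")
        elif host and host not in trusted_domains:
            signals.append(f"link to untrusted host: {host}")

    # 3. Language: two or more pressure words in the same message.
    words = set(re.findall(r"[a-z]+", body.lower()))
    hits = sorted(words & URGENT_WORDS)
    if len(hits) >= 2:
        signals.append("pressure language: " + ", ".join(hits))

    return signals


suspicious = phishing_signals(
    "support@paypa1-secure.example",
    "Urgent: verify your password immediately",
    ["http://192.0.2.10/login"],
    {"example.com"},
)
for reason in suspicious:
    print(reason)
```

In practice, signals like these would be fed to a trained classifier as features rather than used as a final verdict, and a flagged email would still deserve a human look.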

■ Password protection

AI and ML can detect trends in compromised passwords to create rigid password policies and suggest stronger passwords that are harder for hackers to crack. AI-powered systems can also use behavioural biometrics to verify user identities, analysing typing patterns, mouse movements and other cues to detect potential imposters.
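As a rough illustration of the behavioural biometrics idea, the Python sketch below compares the timing between keystrokes in a new login attempt with a profile recorded earlier for the same user. The function names, the sample timings and the 40 ms tolerance are assumptions for illustration only. Real systems use far richer features and trained models rather than a single average.

```python
from statistics import mean


def typing_distance(enrolled, observed):
    """Mean absolute difference (ms) between two lists of inter-key intervals."""
    if len(enrolled) != len(observed) or not enrolled:
        raise ValueError("interval lists must be non-empty and the same length")
    return mean(abs(e - o) for e, o in zip(enrolled, observed))


def looks_like_owner(enrolled, observed, tolerance_ms=40.0):
    """True when the observed typing rhythm is close to the enrolled profile."""
    return typing_distance(enrolled, observed) <= tolerance_ms


# Hypothetical timings (milliseconds between key presses) for the same phrase.
profile = [120, 95, 140, 110]
same_user = [125, 90, 150, 105]
imposter = [220, 200, 60, 300]

print(looks_like_owner(profile, same_user))
print(looks_like_owner(profile, imposter))
```

A check like this works best as one extra signal alongside the password itself, not as a replacement for it.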

■ Network security

By analysing data and patterns, Artificial Intelligence algorithms can learn normal network behaviour and flag any changes that may signal sinister activity. AI can also be used to identify and mitigate Distributed Denial of Service (DDoS) attacks by analysing traffic patterns and blocking untrustworthy IP addresses.
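The idea of learning “normal” behaviour and flagging departures from it can be sketched in a few lines of Python. This example uses a simple statistical baseline (a z-score) in place of a learned model, and the function name, request counts and threshold are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalous_ips(baseline, current, z_threshold=3.0):
    """Flag IPs whose request count sits far above the normal baseline.

    baseline: per-IP request counts observed during normal periods
    current:  mapping of IP address -> request count in the latest window
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    flagged = []
    for ip, count in current.items():
        # With a perfectly flat baseline there is no spread to measure against,
        # so this sketch flags nothing rather than dividing by zero.
        z = (count - mu) / sigma if sigma else 0.0
        if z > z_threshold:
            flagged.append(ip)
    return sorted(flagged)


normal_counts = [90, 100, 110, 95, 105]
latest_window = {"198.51.100.7": 104, "203.0.113.9": 5000}

print(find_anomalous_ips(normal_counts, latest_window))
```

An address flagged this way could then be rate-limited or blocked, ideally after a second check, since a sudden spike can also come from a legitimate source.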

■ Vulnerability Management

With more than 20,000 known vulnerabilities, AI and Machine Learning can be vital tools for detecting anomalies in user accounts, endpoints and servers that can signal an unknown zero-day attack, protecting companies from vulnerabilities before they have even been reported or patched.

While AI has a welcome place in technology, its development should be kept under an ethical watchful eye because as cybersecurity professionals use it to strengthen their defences, cybercriminals also have access to the same technology to create more sophisticated attacks.

However, it cannot be denied that by implementing AI in your cybersecurity strategy, you can significantly improve your defence against and detection of potential threats.